How to distinguish AI-generated text, consistently.

Credit to Jenna Russell, Marzena Karpinska, and Mohit Iyyer.

In the age of LLMs running rampant in the education sector and pushing the whole system into rash decisions about navigating AI usage (trust me, my university has no idea what it’s doing), it is more important than ever to be able to distinguish between human and AI writing.

Because ChatGPT is known to “hallucinate”, confidently retelling events that never happened, it is easy to fall for misinformation that these neural networks mass-produce on the internet. More generally, we want people’s personalities to come through in their writing, rather than seeing the same sentence structures reused over and over until any semblance of originality is erased.

Our best bet so far has been running AI’s sycophantic ramblings through another AI to check whether the AI text has been written by AI (six instances of this word so far, make sure to ctrl + f for the full count) or not. However, this method has proven very inconsistent across large numbers of texts: it often flags human text as AI-generated and is easily tricked by manipulating the prompts. This is what inspired Russell J., Karpinska M., and Iyyer M. (2025) to run an experiment in which five frequent users of LLMs were tasked with identifying AI-generated texts, to see whether they could outperform publicly available detection tools.

Their insight is what inspired me to write this article. I will go over their comments and results, but if you are interested, I strongly suggest reading the paper, as it is a relatively easy read by scientific standards. It’s only about 10 pages long (excluding the 20-page appendix)! But first, let’s see what the experiment actually yielded.

Results of the experiment.

I don’t talk to ChatGPT much anymore (long story), and I can proudly say that I have not used it to create or edit my own writing, yet I still feel like I’m pretty good at recognizing AI text. You see, I operate on the highly intelligent principle of vibes - if the article feels corny, it was probably written by GPT.

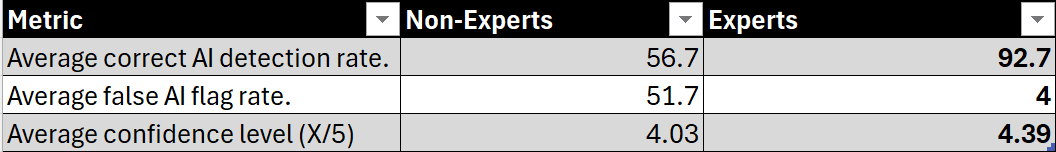

That tracks with the results of the study: the “non-expert” participants, all of whom reported not using LLMs for writing, heavily overestimated their ability to distinguish between human and AI text. Specifically, when presented with 60 texts (30 AI-generated, 30 human-written) and asked to sort them into two categories, their average confidence across all decisions clocked in at 4.03/5, about 80%. Their actual results, however, looked more like random chance than anything.

To be exact - 56.7% of AI-generated articles were detected correctly, and 51.7% of human articles were falsely accused of being AI, which on a 30/30 split works out to about 52.5% overall accuracy, barely better than a coin flip. I mean, that’s not too bad, right? These are people who don’t use AI for their writing, so let’s check on the “experts” who work closely with LLMs in their occupations or studies. What does the table in Figure 1 tell us?

The experts detected AI text correctly an astounding 93% of the time, and falsely flagged human-written text only 4% of the time, across 300 different non-fiction articles. In fact, by majority vote, these five experts correctly categorized 299 out of 300 articles as either AI or human. This held even when the LLMs were given additional instructions to “paraphrase” or “humanize” the text; you can find more details in the paper.
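If you’re wondering how figures like “93% detected” and “4% false-flagged” are calculated, or what a five-person majority vote looks like, here is a minimal Python sketch. The votes and labels are made-up toy data, and the function names are hypothetical; this is my own illustration, not the researchers’ code.

```python
from collections import Counter

# Hypothetical toy data: five annotators vote on each of three articles.
VOTES = [
    ["ai", "ai", "human", "ai", "ai"],
    ["human", "human", "ai", "human", "human"],
    ["ai", "human", "human", "ai", "human"],
]
TRUTH = ["ai", "human", "ai"]  # what each article actually is


def majority_vote(votes):
    """Return the label most annotators chose (an odd panel of 5 cannot tie)."""
    return Counter(votes).most_common(1)[0][0]


def detection_rates(predictions, truths):
    """Return (share of AI texts flagged as AI, share of human texts flagged as AI)."""
    on_ai = [p for p, t in zip(predictions, truths) if t == "ai"]
    on_human = [p for p, t in zip(predictions, truths) if t == "human"]
    true_positive_rate = sum(p == "ai" for p in on_ai) / len(on_ai)
    false_positive_rate = sum(p == "ai" for p in on_human) / len(on_human)
    return true_positive_rate, false_positive_rate


predictions = [majority_vote(v) for v in VOTES]
print(predictions)                          # ['ai', 'human', 'human']
print(detection_rates(predictions, TRUTH))  # (0.5, 0.0)
```

The study’s percentages are these same two rates, just computed over hundreds of articles instead of three.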

The expert participants were asked to leave a short, paragraph-long comment explaining each decision, which gave us a goldmine of advice on how to be more like them - and less like random number generators.

The five features of AI-generated text, and common misconceptions.

In total, the experts looked for about 15 different distinguishing categories when analyzing the articles for potential AI involvement. For the sake of your time, and so as not to spoil the study entirely, I will only go into five major ones.

It is very important to understand that while some of these categories are a solid way to tell AI and human-written text apart, you need to analyze several of them together to get the most accurate result. Otherwise, a lot of text can be falsely flagged just because it falls under two or three of the red-flag categories on the list (including the article you are reading). As with everything, take your time and recognize nuance when using these to analyze a piece of writing.

Vocabulary - 53.1%

Appearing in more than half of the experts’ comments, vocabulary is a major tell of AI involvement in a text. A common misconception is that “fancy” language in a not-so-formal context (in this case, all of the texts presented were non-fiction articles with a 1k-word limit), or simply the use of low-frequency words, is a sign of LLM involvement. This can ring a lot of false alarms. In reality, there is a specific set of words and phrases that AI overuses (delve, crucial, additionally, powerful, etc.), and experts recognize them, which in turn draws their attention to other suspicious features in the text. This category also covers ChatGPT’s favorite phrases: “when it comes to the topic”, “it’s crucial to”, and “it’s important to note” are all very common in AI-generated text.

For the second stage of the experiment, the researchers compiled a list of “AI vocabulary”. They used it to blacklist those words and make the experts’ lives harder when detecting LLM text, but we can treat it as a list of warning signs to help with detection. The full list is below:

Nouns: aspect, challenges, climate, community, component, development, dreams, environment, exploration, grand scheme, health, hidden, importance, landscape, life, manifold, multifaceted, nuance, possibilities, professional, quest, realm, revolution, roadmap, role, significance, tapestry, testament, toolkit, whimsy

Verbs: capturing, change, consider, delve/dive into, elevate, embrace, empower, enact, enhance, engage, ensure, evoking, evolving, explore, fostering, guiding, harness, highlights, improve, integrate, intricate, jeopardizing, journey, navigating, navigate, notes, offering, partaking, resonate, revolutionize, shape, seamlessly, support, tailor, transcend, underscores, understanding

Adjectives: authentic, complex, comprehensive, crafted, creative, critical, crucial, curated, deeper, diverse, elegant, essential, groundbreaking, key, meaningful, paramount, pivotal, powerful, profound, quirky, robust, seamless, significant, straightforward, structured, sustainable, transformative, valuable, vast, vibrant, vivid, whimsical

Adverbs: additionally, aptly, creatively, moreover, successfully

Phrases: as we [verb] the topic, cautionary tale, connect with, has shaped the, in a world of/where, in conclusion, in summary, it’s crucial to, it’s important to note, it’s not about ___ it’s about ___, manage topic issues/problems, not only ___ but also, packs a punch/brings a punch, paving the way, personal growth, quality of life, remember that, simple yet ___, step-by-step, such as, the effects of, the rise of, their understanding of, they identified patterns, to form the, to mitigate the risk, weaving, when it comes to topic
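If you want to run the list over a draft, a crude approach is to count how often listed words and phrases show up. The Python sketch below is my own illustration, not something from the paper: it uses a hand-picked subset of the list, the function name `flag_ai_vocabulary` is hypothetical, and the “hits per 100 words” figure has no validated threshold. The experts treated vocabulary as one cue among many, and so should you.

```python
import re

# Illustrative subset of the list above (extend it with the full list).
AI_WORDS = {
    "delve", "crucial", "additionally", "powerful", "tapestry",
    "testament", "pivotal", "seamless", "moreover", "landscape",
}
AI_PHRASES = [
    "it's important to note",
    "it's crucial to",
    "when it comes to",
    "paving the way",
    "in conclusion",
]


def flag_ai_vocabulary(text):
    """Count listed words and phrases, and report hits per 100 words.

    Words must match exactly ("delves" does not count as "delve"), and
    overlaps count twice ("crucial" inside "it's crucial to"), which is
    fine for a rough warning sign but not for anything stricter.
    """
    # Lowercase and turn curly apostrophes into straight ones so "it’s" matches.
    lowered = text.lower().replace("\u2019", "'")
    words = re.findall(r"[a-z']+", lowered)
    word_hits = [w for w in words if w in AI_WORDS]
    phrase_hits = {p: lowered.count(p) for p in AI_PHRASES if p in lowered}
    total = len(word_hits) + sum(phrase_hits.values())
    per_100 = 100 * total / len(words) if words else 0.0
    return {"word_hits": word_hits, "phrase_hits": phrase_hits,
            "per_100_words": round(per_100, 1)}


print(flag_ai_vocabulary("Additionally, it's crucial to delve into this tapestry."))
```

On that example sentence it finds four listed words plus one listed phrase across eight words, or 62.5 hits per 100 words. That is deliberately over the top; real articles will score far lower, and a human who happens to like the word “crucial” will score higher than you’d expect.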

Sentence structure - 35.9%

Over a third of the experts’ comments cited some aspect of sentence structure as a factor in their decision. The study found that public LLMs, specifically GPT-4o, follow certain structures that they don’t break unless explicitly told to.

AI sentences often follow a complex structure, with multiple dependent and independent clauses. By contrast, human writing mixes simple, complex, and compound sentences throughout its paragraphs. It varies a lot in sentence length and construction, which makes it more engaging and interesting to read. It is important to note, though, that more formal writing has specific sentence structures that are best followed - but we are testing general articles here, nothing too fancy.
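Variation in sentence length is one of the few structural cues you can actually put a number on. Below is a rough Python sketch, my own and not from the study, that reports how much sentence lengths vary in a passage. The function name is hypothetical, the sentence splitting is naive, and a low spread is at most a hint of AI’s uniformity, not proof (formal writing can be uniform too).

```python
import re
import statistics


def sentence_length_spread(text):
    """Return each sentence's word count plus their mean and standard deviation.

    Sentences are split naively on ., ! or ? followed by whitespace, so
    abbreviations like "e.g." will throw the numbers off.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    if not lengths:
        return {"lengths": [], "mean": 0.0, "stdev": 0.0}
    return {
        "lengths": lengths,
        "mean": statistics.fmean(lengths),
        # Population standard deviation: 0.0 means every sentence is the same length.
        "stdev": statistics.pstdev(lengths),
    }


uniform = "One two three. Four five six. Seven eight nine."
varied = "I ran. The study found that experts were accurate most of the time. Wow!"
print(sentence_length_spread(uniform)["stdev"])            # 0.0
print(round(sentence_length_spread(varied)["stdev"], 2))   # 4.5
```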

Some specific examples include listing no more than three items in a sentence, “not only is it … , it’s …!”, and “it’s important to understand that …”. Unfortunately, there isn’t a big list of structures to lean on like there was for vocabulary, so I guess this understanding has to come from long-term observation.

Wait, how many specific examples did I list again?

Grammar & Punctuation - 24.8%

A quarter of the comments referenced grammar & punctuation as a potential flag to look out for when identifying AI. This category is probably the most dependent on the style of the text you are trying to identify, and it can produce false positives if not used carefully.

AI uses grammatically perfect language and tries to avoid dashes and ellipses as much as possible. Human writing, on the other hand, tends to contain very minor errors, as well as a wide variety of brackets, quotes, syntax, and dashes intermixed with the sentences, with short spurts of common sections throughout. Humans generally adhere less strictly to English grammar & punctuation rules in order to get their point across, while AI writes very formally unless told otherwise.

Sometimes humans may write something that confuses in text, but if told verbally in a conversation would makes perfect sense, ya feel me?
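The punctuation habits described above are easy to tally. This small Python sketch (again my own illustration, with a hypothetical function name and my own choice of which marks to count) counts dashes, ellipses, brackets, and double quotes in a passage. Zero dashes and ellipses in a long, casual article might be worth a second look, but since this category depends so heavily on style, treat the counts as context rather than a verdict.

```python
def punctuation_profile(text):
    """Count the punctuation habits mentioned above in a passage.

    Hyphens inside words ("AI-generated") are deliberately ignored: only a
    spaced " - ", an en dash or an em dash counts as a dash.
    """
    return {
        "dashes": text.count(" - ") + text.count("\u2013") + text.count("\u2014"),
        "ellipses": text.count("...") + text.count("\u2026"),
        "brackets": text.count("(") + text.count("["),
        "double_quotes": sum(text.count(c) for c in '"\u201c\u201d'),
    }


print(punctuation_profile('I mean (honestly) - it\u2019s "fine"... right?'))
# {'dashes': 1, 'ellipses': 1, 'brackets': 1, 'double_quotes': 2}
```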

Originality - 23.7%

Honestly, a lot of the time you won’t even need these tips to recognize AI writing - five minutes into an article, you would recognize it as a total drag.

Text generated by AI is very “safe” and, as we’ve already observed, predictable. It lacks surprises and humor, often leaving the reader disengaged and bored. Of course, this is quite difficult to assess and will vary from person to person, but AI will always stick to the “obvious” way of answering a prompt, which leaves it lacking originality. As it can’t think for itself, it is unable to incorporate the twists, unexpected insights, and humor that even a minimally experienced human writer can.

That said, some of the experts’ comments on this category make me think they are partially AI themselves (Figure 2).

Conclusions - 13.1%

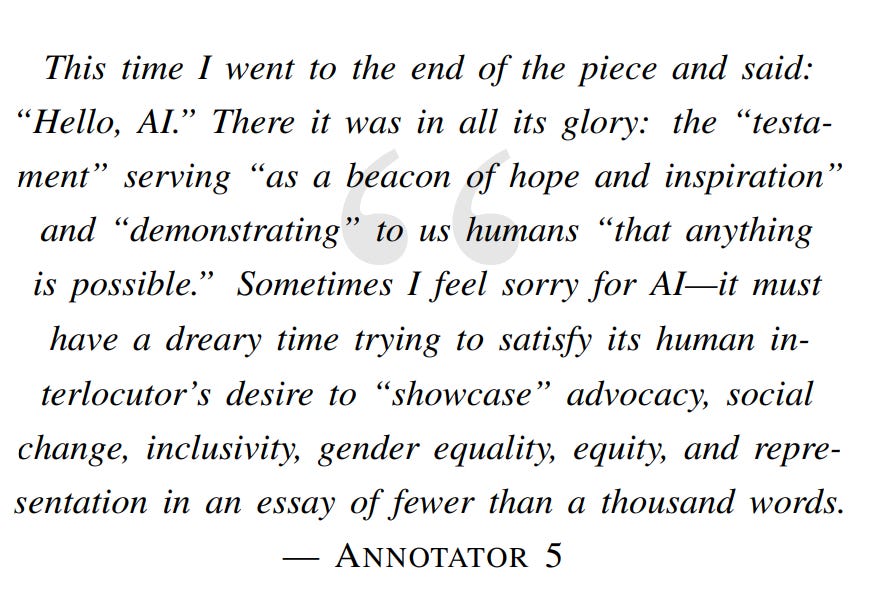

AI-generated conclusions tend to be repetitive and often end up as a simple summary. Their sentences are framed as reflective statements from an onlooker’s perspective, regardless of the topic. Human conclusions end abruptly a lot more often and are less uniform overall.

To understand this category on a deeper level, however, we must look at how LLMs tend to operate: at a base level, they always try to match the user’s beliefs rather than the hard truth. This happens even when they are told otherwise, because AI is inherently positive and tries to uplift the user with its writing, especially toward the conclusion. The result is overly positive conclusions. Human-made conclusions (and introductions) are typically very varied, as mentioned under Originality, and each has a uniqueness and special flair (Figure 3) that AI simply cannot capture, even with its large dataset.

Summary - and the Trojan horse.

In the end, what this study—and my own reflections on it—makes clear is that the ability to distinguish AI from human writing is less about any single “tell” and more about recognizing patterns of predictability, uniformity, and over-polish that machines cannot quite mask. Vocabulary quirks, rigid sentence structures, flawless grammar, and “safe” conclusions all contribute to the uncanny sense that something has been produced by a system rather than a person, but only when taken together do they offer real reliability. It’s not just about spotting mistakes, it’s about noticing the lack of humanity in the rhythm and feel of the text. As AI becomes more ingrained in education and communication, the real challenge will be preserving authenticity in human voices while developing sharper literacy to spot when those voices are being imitated (Figure 4).

I sincerely hope you were able to tell that the conclusion above wasn’t written by me, and if you couldn’t, that you at least had a feeling something was off.

If you found anything in this article even remotely interesting, I urge you to check out the original paper (cited at the end). It goes far more in-depth on every aspect covered here and provides a lot of context for the data. I found it quite a beginner-friendly read, so if you have never read a scientific paper before, this would be a great place to start! Some categories I didn’t mention that you may be interested in are quotes, clarity, formatting, formality, tone, factuality, and many others.

To conclude, the main takeaway of the paper is that these findings can be used to train people to recognize AI involvement in high-stakes writing scenarios, and that the more a person interacts with LLMs, the better they get at spotting LLM involvement elsewhere. That may sound tautological, because it kind of is. All of the “experts” in the experiment work in writing-related fields where they use AI on a weekly or daily basis. There is, of course, a danger that comes with so much interaction.

The blade of AI experimentation cuts both ways - the more you interact with LLMs, the more they become you, and the more you become them. As you feed them more of your personal writing to get them to mimic your style, you risk becoming overly reliant on them and losing your personal growth as a writer. Be aware of your own limitations. Don’t try to pass AI writing off as your own, because you might succeed. You may even get it working to the point where most people can’t tell that it wasn’t really your writing.

That is correct.

They can’t tell because your own writing isn’t yours anymore.

He who fights with monsters should look to it that he himself does not become a monster. And if you gaze long into an abyss, the abyss also gazes into you. - Friedrich Nietzsche

Russell, J., Karpinska, M., & Iyyer, M. (2025, January 26). People who frequently use ChatGPT for writing tasks are accurate and robust detectors of AI-generated text [Preprint]. arXiv. https://arxiv.org/abs/2501.15654
